Eric’s initial role at BSRC was to make sure this all ran smoothly: “I started out as a programmer, working on the server side – programs that take the data from the telescope and turn them into small chunks that can be processed by a personal computer. Since then I’ve gotten involved in more projects and come up with new ways to analyze the data. I started out with SETI@home. We were taking the data and doing analysis for narrow-band signals, and looking for the signature a signal would have if it drifted through the telescope field of view. Both had been done to some extent before, but SETI@home was different in that it looked for lots of different velocity or Doppler drifts. Previous instruments couldn’t do that because it took too much computation. But when you throw a million computers at it, it tends to become easier.”

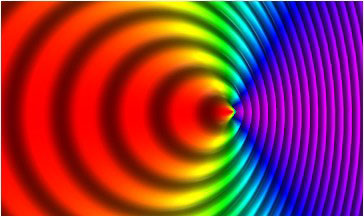

“Doppler drift” is akin to the change in pitch of a police siren as the vehicle moves towards and away from an observer. It’s one clue that a candidate SETI signal is not coming from a local transmitter that’s stationary relative to the telescope, but instead might be from a distant planet that’s moving relative to our own Solar System. “If a transmitter is on (or orbiting) a planet, and the planet is rotating, they are not moving at the same velocity with respect to us all the time,” explains Eric. “As the transmitter moves towards us, its signal moves up in frequency. As it moves away from us, it moves down in frequency. The standard detection techniques only look for things that are stable in frequency. So if you want to look for these signals that are drifting in frequency, you have to do a different type of analysis. We use a mathematical trick called a coherent dechirp algorithm. That was the revolutionary part of SETI@home. It takes around 30,000 times longer than it does to do just a single frequency analysis, but since we had lots of people interested and lots of computer power available, it worked out.”
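To make the idea concrete, here is a minimal sketch of a dechirp search in Python. It is not the SETI@home code. It is a toy under stated assumptions: the drift is taken to be linear in time, the function names (`make_chirp`, `dechirp`, `peak_power`, `search_drift`) and all the numbers are hypothetical, and a slow textbook Fourier transform stands in for the fast one a real pipeline would use. For each trial drift rate, the data are multiplied by a chirp of the opposite sign; if the guess is right, the drifting tone collapses back into a single frequency bin and its peak power jumps.

```python
import cmath
import math


def make_chirp(n_samples, sample_rate, f0, drift):
    """Complex tone starting at f0 Hz whose frequency changes by `drift` Hz per second."""
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        phase = 2 * math.pi * (f0 * t + 0.5 * drift * t * t)
        out.append(cmath.exp(1j * phase))
    return out


def dechirp(samples, sample_rate, trial_drift):
    """Undo an assumed linear drift by multiplying with the opposite-sign chirp."""
    out = []
    for n, x in enumerate(samples):
        t = n / sample_rate
        out.append(x * cmath.exp(-1j * math.pi * trial_drift * t * t))
    return out


def peak_power(samples):
    """Largest bin power of a naive DFT (O(N^2), acceptable for a toy example)."""
    n_samples = len(samples)
    best = 0.0
    for k in range(n_samples):
        acc = 0j
        for n, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * n / n_samples)
        best = max(best, abs(acc) ** 2)
    return best


def search_drift(samples, sample_rate, trial_drifts):
    """Score every trial drift rate; return the best one and all the scores."""
    scores = {d: peak_power(dechirp(samples, sample_rate, d)) for d in trial_drifts}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # Hypothetical test signal: 1 second of data, tone at 20 Hz drifting at 16 Hz/s.
    data = make_chirp(n_samples=128, sample_rate=128.0, f0=20.0, drift=16.0)
    best, scores = search_drift(data, 128.0, [-16.0, -8.0, 0.0, 8.0, 16.0, 24.0])
    print("best trial drift (Hz/s):", best)
```

Each trial drift rate costs one full spectral analysis, so in this sketch the work grows in direct proportion to the number of drift rates tried. That is why a search over many drifts is so much more expensive than a single fixed-frequency analysis.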